A post about the speed of the computation of the Ising Model. I compare Python, Cython, Numba, Mojo, & Julia on the Ising Model.

I am sure there are optimizations I missed, e.g. vectorize, parallel, etc. This serves as a comparison of the naive implementation I have blogged about before, plus some tricks that I am aware of.

TL;DR

| Language | Naive Speed | Development Effort Rank |

|---|

| Python | 140ms | 1 |

| Cython | 0.580ms | 5 |

| Numba | 1.1ms | 2 |

| Mojo | 1.27ms | 4 |

| Julia | 0.850ms | 3 |

Python ~ 140ms

Without changing @Jake VanderPlas’s code, lets rerun a vanilla Python for-loop and see how this speed compares with the speed from 2017 in the original blogpost.

1

2

3

4

5

6

7

| import numpy as np

import pandas as pd

import plotly.express as px

import plotly.graph_objects as go

from IPython.display import display, Markdown

np.random.seed(1)

|

1

2

3

4

5

6

7

8

9

10

11

| # Plotting helper functions.

def render_plotly_html(fig: go.Figure) -> None:

"""Display a Plotly figure in markdown with HTML."""

# Ensure frame is square

display(

Markdown(

fig.to_html(

include_plotlyjs="cdn",

)

)

)

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

| # Functions from Jake VanderPlas's blog post.

def random_spin_field(N, M):

return np.random.choice([-1, 1], size=(N, M))

# Add an `update_fn` arg.

def ising_step(field, beta=0.4):

N, M = field.shape

for n_offset in range(2):

for m_offset in range(2):

for n in range(n_offset, N, 2):

for m in range(m_offset, M, 2):

_ising_update(field, n, m, beta)

return field

def _ising_update(field, n, m, beta):

total = 0

N, M = field.shape

for i in range(n - 1, n + 2):

for j in range(m - 1, m + 2):

if i == n and j == m:

continue

total += field[i % N, j % M]

dE = 2 * field[n, m] * total

if dE <= 0:

field[n, m] *= -1

elif np.exp(-dE * beta) > np.random.rand():

field[n, m] *= -1

|

1

2

| N, M = 200, 200

field = random_spin_field(N, M)

|

1

| %timeit ising_step(field)

|

1

| 140 ms ± 1.74 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)

|

It appears Python has some (2x) inherent speedup over time.

Unrolling For-Loops

For small for-loops (like iterating over the neighbors in _ising_update), we can use a trick called unrolling the for-loop. Basically, we rewrite the for-loop as an explicit calculation. I have seen this used successively in Micropython as well. In Mojo, there is even a for-loop decorator for this. For what follows, we can achieve a 10-20% speedup by rewriting the two most inner for-loops:

1

2

3

| for i in range(n - 1, n + 2):

for j in range(m - 1, m + 2):

...

|

With the unrolled version:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

| dE = (

2

* field[n, m]

# Unrolled for-loop over 8 neighbors.

* (

field[(n - 1) % N, (m - 1) % M]

+ field[(n - 1) % N, m]

+ field[(n - 1) % N, (m + 1) % M]

+ field[n, (m - 1) % M]

+ field[n, (m + 1) % M]

+ field[(n + 1) % N, (m - 1) % M]

+ field[(n + 1) % N, m]

+ field[(n + 1) % N, (m + 1) % M]

)

)

|

For the fun of it, I will stick with the “rolled” version to keep comparisons fair and the blog a little shorter. If you are interested in squeezing out every ms of speed, I recommend unrolling as many small loops as possible.

Cython ~ 580 µs

And similarly, for the Cython code from the original blog.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

| %%cython

cimport cython

import numpy as np

cimport numpy as np

from libc.math cimport exp

from libc.stdlib cimport rand

cdef extern from "limits.h":

int RAND_MAX

@cython.boundscheck(False)

@cython.wraparound(False)

def cy_ising_step(np.int64_t[:, :] field, float beta=0.4):

cdef int N = field.shape[0]

cdef int M = field.shape[1]

cdef int n_offset, m_offset, n, m

for n_offset in range(2):

for m_offset in range(2):

for n in range(n_offset, N, 2):

for m in range(m_offset, M, 2):

_cy_ising_update(field, n, m, beta)

return np.array(field)

@cython.boundscheck(False)

@cython.wraparound(False)

cdef _cy_ising_update(np.int64_t[:, :] field, int n, int m, float beta):

cdef int total = 0

cdef int N = field.shape[0]

cdef int M = field.shape[1]

cdef int i, j

for i in range(n-1, n+2):

for j in range(m-1, m+2):

if i == n and j == m:

continue

total += field[i % N, j % M]

cdef float dE = 2 * field[n, m] * total

if dE <= 0:

field[n, m] *= -1

elif exp(-dE * beta) * RAND_MAX > rand():

field[n, m] *= -1

|

1

| %timeit cy_ising_step(field)

|

1

| 584 µs ± 5.11 µs per loop (mean ± std. dev. of 7 runs, 1,000 loops each)

|

Cython also has become faster (4x) since 2017.

Numba ~ 1.1ms

@Jake VanderPlas also mentions another blog that used Numba to speed things up. Lets stick with our original implementation and see how far we can get.

Naive @njit ~ 1.1ms

To use basic Numba, we just need to rewrite our update function and add the @njit decorator to the function.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

| @njit

def numba_ising_step(field, beta=0.4):

N, M = field.shape

for n_offset in range(2):

for m_offset in range(2):

for n in range(n_offset, N, 2):

for m in range(m_offset, M, 2):

total = 0

for i in range(n - 1, n + 2):

for j in range(m - 1, m + 2):

if i == n and j == m:

continue

total += field[i % N, j % M]

dE = 2 * field[n, m] * total

if dE <= 0:

field[n, m] *= -1

elif np.exp(-dE * beta) > np.random.rand():

field[n, m] *= -1

return field

|

1

2

3

4

| # Precompile numba_ising_step

numba_ising_step(field)

# Time the code

%timeit numba_ising_step(field)

|

1

| 1.09 ms ± 5.59 µs per loop (mean ± std. dev. of 7 runs, 1,000 loops each)

|

Although faster than Python, it is still ~2x slower than Cython.

@njit(parallel=True) ~ 650µs

Before trying to speed this up, lets first understand a common exploit of the Ising Model. First, lets extract our outer for-loops and simply keep record of the n’s, ms, and offset.

1

2

3

4

5

6

7

8

9

10

| ns = []

ms = []

offsets = []

for n_offset in range(2):

for m_offset in range(2):

for n in range(n_offset, 10, 2):

for m in range(m_offset, 10, 2):

ns.append(n)

ms.append(m)

offsets.append((n_offset, m_offset))

|

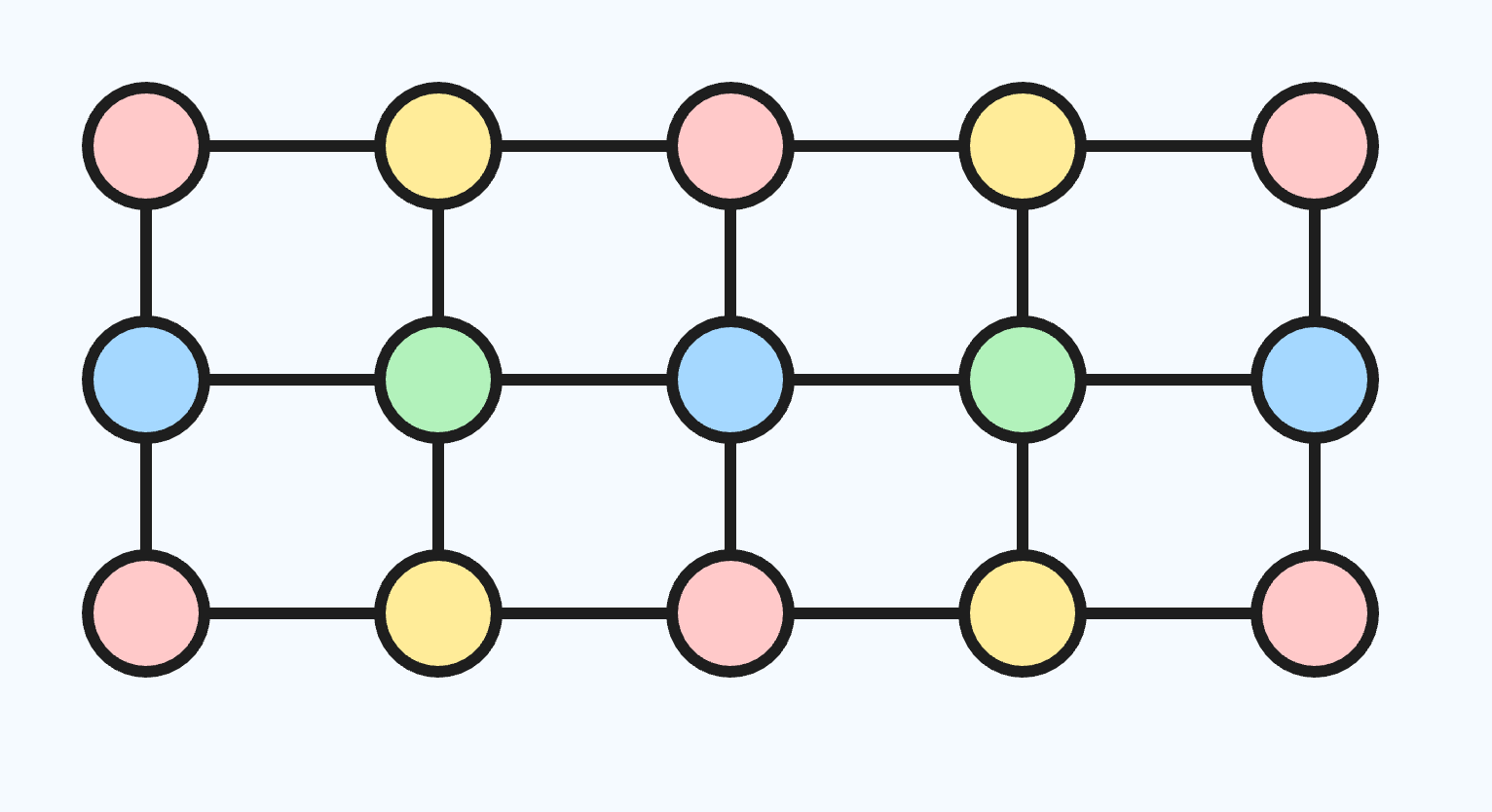

Now, lets plot them colored by their offset. I include the index which is the order of our traversal.

1

2

3

4

5

6

7

8

9

10

| fig = px.scatter(

pd.DataFrame({"n": ns, "m": ms, "offset": offsets}).reset_index(),

x="n",

y="m",

color="offset",

title="Order of n vs. m",

text="index",

)

fig.update_traces(textposition="bottom right")

render_plotly_html(fig)

|

If you click on any one of the offsets, you’ll notice that when that offset disappears, all of its neighbors stay intact. Because the markov blanket of each cell in the Ising Model are the immediate neighbors, this means these update steps are independent, and we can do them in parallel using Numba’s parallel=True.

1

| from numba import prange

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

| @njit(parallel=True)

def numba_parallel_ising_step(field, beta=0.4):

N, M = field.shape

for n_offset in range(2):

for m_offset in range(2):

ns = np.arange(n_offset, N, 2)

for n in prange(len(ns)):

n = ns[n]

ms = np.arange(m_offset, M, 2)

for m in prange(len(ms)):

m = ms[m]

total = 0

for i in range(n - 1, n + 2):

for j in range(m - 1, m + 2):

if i == n and j == m:

continue

total += field[i % N, j % M]

dE = 2 * field[n, m] * total

if dE <= 0:

field[n, m] *= -1

elif np.exp(-dE * beta) > np.random.rand():

field[n, m] *= -1

return field

|

1

2

| numba_parallel_ising_step(field)

%timeit numba_parallel_ising_step(field)

|

1

| 643 µs ± 13.4 µs per loop (mean ± std. dev. of 7 runs, 1,000 loops each)

|

prange also has some ability to detect parallel-eligible code itself.

1

| numba_parallel_ising_step.parallel_diagnostics(level=1)

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

| ================================================================================

Parallel Accelerator Optimizing: Function numba_parallel_ising_step,

/var/folders/1j/7lmy8byj4sv720g4hf67kkmm0000gn/T/ipykernel_41034/3547723262.py

(1)

================================================================================

Parallel loop listing for Function numba_parallel_ising_step, /var/folders/1j/7lmy8byj4sv720g4hf67kkmm0000gn/T/ipykernel_41034/3547723262.py (1)

-------------------------------------------------------------------|loop #ID

@njit(parallel=True) |

def numba_parallel_ising_step(field, beta=0.4): |

N, M = field.shape |

|

for n_offset in range(2): |

for m_offset in range(2): |

ns = np.arange(n_offset, N, 2) |

for n in prange(len(ns)):------------------------------| #3

n = ns[n] |

ms = np.arange(m_offset, M, 2) |

for m in prange(len(ms)):--------------------------| #2

m = ms[m] |

total = 0 |

for i in range(n - 1, n + 2): |

for j in range(m - 1, m + 2): |

if i == n and j == m: |

continue |

total += field[i % N, j % M] |

dE = 2 * field[n, m] * total |

if dE <= 0: |

field[n, m] *= -1 |

elif np.exp(-dE * beta) > np.random.rand(): |

field[n, m] *= -1 |

return field |

------------------------------ After Optimisation ------------------------------

Parallel region 0:

+--3 (parallel)

+--1 (serial, fused with loop(s): 2)

+--2 (serial)

Parallel region 1:

+--0 (parallel)

+--1 (serial)

Parallel region 0 (loop #3) had 1 loop(s) fused and 2 loop(s) serialized as part

of the larger parallel loop (#3).

Parallel region 1 (loop #0) had 0 loop(s) fused and 1 loop(s) serialized as part

of the larger parallel loop (#0).

--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

|

With parallel=True, we are now in the neighborhood of Cython.

1

2

3

4

| # A little magic to automatically write my blog :)

import subprocess

subprocess.run(["jupyter", "nbconvert", "--to", "markdown", "ising_model_speed.ipynb"])

|

Mojo ~ 1.27ms

Mojo is a relatively newcomer to the scientific computing scene, but is quickly gaining popularity.

Mojo codeblocks display as Python since markdown does not yet support Mojo highlighting.

First, we will need to import a variety of items from the standard library.

1

2

3

4

5

6

7

8

| from tensor import Tensor, TensorSpec, TensorShape

from utils.index import Index

from random import rand, random_float64

from math import exp

from benchmark import Report

import benchmark

alias data_type = DType.float32

|

Now, we can rewrite our functions in Mojo. First, we start with the random_spin_field:

1

2

3

4

5

6

7

8

9

| fn random_spin_field(N: Int, M: Int) -> Tensor[data_type]:

var t = rand[data_type](N, M)

for i in range(N):

for j in range(M):

if t[i, j] < 0.5:

t[Index(i, j)] = -1

else:

t[Index(i, j)] = 1

return t

|

Next, the internal _ising_update which takes the summation over the neighbors:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

| @always_inline

fn _ising_update(inout field: Tensor[data_type], n: Int, m: Int, beta: Float32 = 0.4) -> None:

var total = SIMD[data_type, 1]()

var shape = field.shape()

var N = shape[0]

var M = shape[1]

for i in range(n - 1, n + 2):

for j in range(m - 1, m + 2):

if i == n and j == m:

continue

total += field[i % N, j % M]

var dE = 2 * field[n, m] * total

if dE <= 0:

field[Index(n, m)] *= -1

elif exp(-dE * beta) > random_float64().cast[field.dtype]():

field[Index(n, m)] *= -1

|

Lastly, we can define the ising_step:

1

2

3

4

5

6

7

8

9

| fn ising_step(inout field: Tensor[data_type]) -> None:

var shape = field.shape()

var N = shape[0]

var M = shape[1]

for n_offset in range(2):

for m_offset in range(2):

for n in range(n_offset, N, 2):

for m in range(m_offset, M, 2):

_ising_update(field, n, m)

|

We can define a small benchmark function.

1

2

3

4

5

6

7

8

9

10

11

12

| @always_inline

fn bench() -> Report:

var N = 200

var M = 200

var field = random_spin_field(N, M)

@always_inline

@parameter

fn ising_step_fn():

ising_step(field)

return benchmark.run[ising_step_fn](max_runtime_secs=5)

|

1

2

3

| var report = bench()

# Print a report in Milliseconds

report.print("ms")

|

1

2

3

4

5

6

7

8

9

10

11

| ---------------------

Benchmark Report (ms)

---------------------

Mean: 1.2608148342977381

Total: 2396.8090000000002

Iters: 1901

Warmup Mean: 1.492

Warmup Total: 2.984

Warmup Iters: 2

Fastest Mean: 1.2608148342977381

Slowest Mean: 1.2608148342977381

|

We see that Mojo runs a little bit slower than Numba without optimization.

1

2

3

4

5

6

| %%python

# A little magic to automatically write my blog :)

import subprocess

subprocess.run(["jupyter", "nbconvert", "--to", "markdown", "ising_model_speed_2.ipynb"])

subprocess.run("sed -i'' -e 's/```python/```python/g' ising_model_speed_2.md", shell=True)

|

Julia ~ 850μs

Lastly, we look at Julia, another member of the LLVM family.

1

2

3

| function random_spin_field(N:: Integer, M:: Integer)::Matrix{Int8}

return rand([-1, 1], N, M)

end

|

1

| random_spin_field (generic function with 1 method)

|

1

2

3

4

5

6

7

8

9

10

11

12

13

| function ising_step(field::Matrix{Int8}, beta::Float32, func)::Matrix{Int8}

N, M = size(field)

for n_offset in 1:2

for m_offset in 1:2

for n in n_offset:2:N-1

for m in m_offset:2:M-1

func(field, n, m, beta)

end

end

end

end

return field

end

|

1

| ising_step (generic function with 1 method)

|

Julia translates pretty closely from Python, just take note of 1-indexed arrays instead of 0-indexed arrays.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| function _ising_step(field::Matrix{Int8}, n::Integer, m::Integer, beta::Float32)

total = 0

N, M = size(field)

for i in n-1:n+1

for j in m-1:m+1

if i == n && j == m

continue

end

# Convert to 0-indexing

i -= 1

j -= 1

# Take the remainder and convert back to 1-indexing.

total += field[abs(i % N) + 1, abs(j % M) + 1]

end

end

dE = 2 * field[n, m] * total

if dE <= 0

field[n, m] *= -1

elseif exp(-dE * beta) > rand()

field[n, m] *= -1

end

end

|

1

| _ising_step (generic function with 1 method)

|

1

2

3

4

| N, M = 200, 200

field = random_spin_field(N, M)

ising_step(field, 0.04f0, _ising_step)

println(size(field))

|

1

2

3

| using BenchmarkTools

@btime ising_step(field, 0.04f0, _ising_step)

println("")

|

1

| 853.750 μs (0 allocations: 0 bytes)

|

Which almost runs as fast as Cython

1

| run(`jupyter nbconvert --to markdown ising_model_speed_3.ipynb`)

|

Summary

The naive translation of each language beats Python by ~100x, which is pretty good for basic applications. If you are a fan of Swift, then Mojo will come very naturally since both are syntactic sugar for LLVM. If you come from R, then Julia has similar syntax and emphasis on scientific computing. Numba can be applied with a simple decorator, making it a great first pass attempt on existing code. Cython provides the most speed, but at the highest development cost (IMO).